Monitoring

The "Monitoring" tab in View AI provides a centralized place to observe the performance and outputs of your deployed models.

Real-Time Inference: Processes incoming data through the model as it arrives, generating predictions on the fly.

Batch Inference: Users can upload test datasets to evaluate the model on new data in a batch process.

Data Drift and Model Drift: Compares incoming data and model behavior against a baseline. This helps you assess the model’s generalizability and its performance on unseen data.

Drift Metrics

Jensen–Shannon distance (JSD)

A distance metric between the distribution of a field in the baseline dataset and the distribution of the same field over the time period of interest.
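To illustrate the definition, here is a minimal sketch that computes the Jensen–Shannon distance between two samples of a categorical field. The distance is the square root of the Jensen–Shannon divergence ½·KL(P‖M) + ½·KL(Q‖M), where M = (P + Q)/2. The sketch assumes log base 2, which keeps the distance between 0 and 1. It also assumes that numeric fields have already been binned. View AI's log base and binning are not stated here, and the function names are hypothetical.

```python
import math
from collections import Counter


def _probabilities(values, categories):
    """Share of `values` falling in each category, in the order of `categories`."""
    counts = Counter(values)
    total = len(values)
    return [counts[c] / total for c in categories]


def _kl_bits(p, q):
    """Kullback-Leibler divergence KL(p || q) in bits; terms with p_i == 0 add nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_distance(baseline, current):
    """Jensen-Shannon distance between two samples of a categorical field.

    Assumes log base 2, so the result lies in [0, 1]:
    0 means identical distributions, 1 means no overlap at all.
    """
    categories = sorted(set(baseline) | set(current))
    p = _probabilities(baseline, categories)
    q = _probabilities(current, categories)
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]  # mixture distribution M
    divergence = 0.5 * _kl_bits(p, m) + 0.5 * _kl_bits(q, m)
    # Clamp tiny negative rounding errors before taking the square root.
    return math.sqrt(max(divergence, 0.0))


# Hypothetical example: a field split 50/50 in the baseline but 90/10 now.
baseline = ["approved"] * 50 + ["denied"] * 50
current = ["approved"] * 90 + ["denied"] * 10
print(round(js_distance(baseline, current), 3))  # prints 0.383
```

Unlike KL divergence, this distance is symmetric. It also stays finite when a category appears in only one of the two samples.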

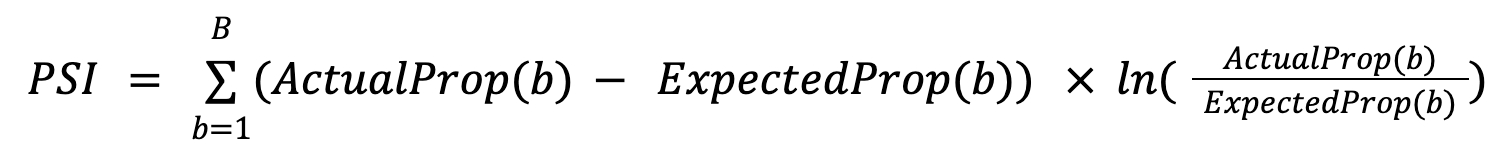

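Population Stability Index (PSI)

A drift metric based on grouping the values of a variable into bins (numeric fields) or categories (categorical fields). The differences in each bin's share of the data between the baseline and the time period of interest are then used to calculate it as follows:

$$
\mathrm{PSI} = \sum_{i=1}^{B} (a_i - e_i)\,\ln\!\left(\frac{a_i}{e_i}\right)
$$

where $B$ is the number of bins, $e_i$ is the fraction of baseline values in bin $i$, and $a_i$ is the fraction of values in bin $i$ during the time period of interest.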
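A minimal sketch of PSI for a numeric field follows. It assumes the caller supplies the bin edges. It also floors empty bins at a small epsilon so that the logarithm stays finite. The binning strategy, the epsilon value, and the names `psi` and `_bin_fractions` are illustrative assumptions, not View AI's implementation.

```python
import bisect
import math


def _bin_fractions(values, edges, eps):
    """Fraction of `values` in each bin defined by the sorted interior `edges`.

    k edges produce k + 1 bins: values below edges[0] go to the first bin and
    values at or above edges[-1] go to the last bin.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    # Floor empty bins at eps so ln(a_i / e_i) stays finite (assumed smoothing).
    return [max(c / len(values), eps) for c in counts]


def psi(baseline, current, edges, eps=1e-4):
    """PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i)."""
    e = _bin_fractions(baseline, edges, eps)  # baseline fractions
    a = _bin_fractions(current, edges, eps)   # time-period-of-interest fractions
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Hypothetical numeric feature, with bin edges chosen only for illustration.
edges = [2.5, 4.5, 6.5]
baseline = [1, 2, 3, 4, 5, 6, 7, 8]
current = [1, 2, 7, 8, 7, 8, 7, 8]
print(psi(baseline, baseline, edges))  # identical distributions give 0.0
print(psi(baseline, current, edges))
```

Each term (a_i − e_i)·ln(a_i / e_i) is non-negative, so PSI grows as mass moves between bins. The epsilon floor matters most when the time period of interest fills bins that were empty in the baseline, or empties bins that were filled.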

In the Data Drift table, select a feature to compare its distribution over the time period under consideration with its distribution in the baseline dataset.

Average Values: The mean of a field (feature or prediction) over time.

Feature Drift: The drift of a feature between the baseline and the time period of interest, calculated using the selected drift metric (JSD or PSI).
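To illustrate Average Values, this sketch averages a field per calendar day from (timestamp, value) pairs. The daily window and the `daily_averages` name are assumptions made for illustration. The aggregation period View AI uses is not stated here.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def daily_averages(records):
    """Mean of a field per calendar day.

    `records` is an iterable of (timestamp, value) pairs, where `timestamp` is a
    datetime and `value` is a feature value or model prediction. Returns
    (date, mean) tuples in chronological order.
    """
    by_day = defaultdict(list)
    for timestamp, value in records:
        by_day[timestamp.date()].append(value)
    return [(day, mean(values)) for day, values in sorted(by_day.items())]


# Hypothetical prediction scores logged across two days.
records = [
    (datetime(2024, 5, 1, 9, 30), 0.2),
    (datetime(2024, 5, 1, 17, 0), 0.6),
    (datetime(2024, 5, 2, 8, 15), 0.9),
]
for day, avg in daily_averages(records):
    print(day, round(avg, 2))
```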

How do I interpret the predictions?

Local Explanations

A local explanation chart shows how individual features contribute to a specific prediction made by a machine learning model. It breaks down the model's decision-making process for a particular instance, highlighting the influence of each feature.

The y-axis lists the features, that is, the variables the model considered when making its prediction. The x-axis shows the contribution bars, which indicate how much each feature contributed to the final prediction.

Positive Contributions: Bars extending to the right (in orange) indicate features that push the prediction towards the predicted class.

Negative Contributions: Bars extending to the left (in blue) indicate features that push the prediction away from the predicted class.

Intercept (Baseline): This represents the starting point of the prediction before considering any features. It's the baseline probability of the predicted class.
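The explainer View AI uses is not stated here. This sketch therefore assumes a logistic regression model, for which an exact additive breakdown exists:

- Each feature contributes weight × value in log-odds space.
- The bias plays the role of the intercept.
- Signs are flipped when class 0 is predicted, so a positive value always pushes toward the predicted class, matching the chart's convention.

The feature names, weights, and helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def local_explanation(weights, bias, x):
    """Exact additive explanation of one logistic-regression prediction.

    Each feature contributes weights[f] * x[f] in log-odds space and the bias is
    the intercept. Signs are flipped when class 0 is predicted, so a positive
    contribution always pushes toward the predicted class.
    """
    logit = bias + sum(weights[f] * x[f] for f in weights)
    predicted = 1 if sigmoid(logit) >= 0.5 else 0
    sign = 1 if predicted == 1 else -1
    contributions = {f: sign * weights[f] * x[f] for f in weights}
    return {
        "predicted": predicted,
        "intercept_logit": sign * bias,
        # Probability of the predicted class with every feature at zero.
        "intercept_prob": sigmoid(sign * bias),
        # Largest effects first, as in the chart's feature list.
        "contributions": sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True),
    }


# Hypothetical loan model: standardized features, made-up weights.
weights = {"income": 0.8, "debt_ratio": -1.5, "late_payments": -0.7}
applicant = {"income": 1.2, "debt_ratio": 0.9, "late_payments": 2.0}
result = local_explanation(weights, bias=-0.2, x=applicant)
print("predicted class:", result["predicted"])
for feature, value in result["contributions"]:
    direction = "toward" if value > 0 else "away from"
    print(f"{feature:>14}: {value:+.2f} ({direction} predicted class)")
```

In this linear case, the intercept plus all contributions equals the log-odds of the predicted class. That is what lets the bars be read as a complete breakdown of the prediction. Explainers for non-linear models generally only approximate this property.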

What steps should I take based on this information?

Understanding Specific Decisions: Local explanations clarify why a model made a particular decision in real time. For instance, if an automated system denies a loan application, a local explanation can show which of the applicant's specific data points led to that decision.

Identifying Data Issues: Local explanations can highlight data labeling errors, outliers, or biases, indicating areas for data collection or feature engineering improvements.

Improving Model Accuracy: Analyzing feature impacts on predictions allows targeted adjustments to enhance model performance. For example, if certain features consistently lead to incorrect predictions, they might need to be re-engineered, or the model may require additional training data that better captures the diversity of real-world scenarios.

Iterative Refinement: Immediate feedback from local explanations helps refine a model during tuning by focusing attention on the instances it handles poorly, so that later versions handle them better.
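To find features that consistently lead to incorrect predictions, local explanations can be aggregated over the misclassified instances. This sketch assumes each explanation is exported as a dict holding per-feature contributions toward the predicted class, together with the predicted and actual labels. That record format and the `features_driving_errors` name are hypothetical.

```python
from collections import defaultdict


def features_driving_errors(explanations, top_k=3):
    """Rank features by their average contribution toward wrong predictions.

    Each item in `explanations` is a dict with keys:
      "contributions": {feature: contribution toward the predicted class}
      "predicted": predicted label
      "actual": true label
    Only misclassified instances are used. A large positive average means the
    feature tends to push the model toward the (wrong) class it predicted.
    """
    totals = defaultdict(float)
    n_errors = 0
    for e in explanations:
        if e["predicted"] == e["actual"]:
            continue  # correct predictions are ignored
        n_errors += 1
        for feature, value in e["contributions"].items():
            totals[feature] += value
    if n_errors == 0:
        return []
    # Features absent from an explanation implicitly count as contributing 0.
    averages = {f: total / n_errors for f, total in totals.items()}
    return sorted(averages.items(), key=lambda kv: kv[1], reverse=True)[:top_k]


# Hypothetical exported explanations.
explanations = [
    {"contributions": {"a": 0.5, "b": -0.1}, "predicted": 1, "actual": 0},
    {"contributions": {"a": 0.3, "b": 0.2}, "predicted": 0, "actual": 1},
    {"contributions": {"a": -2.0, "b": 5.0}, "predicted": 1, "actual": 1},
]
print(features_driving_errors(explanations))
```

Features at the top of this ranking are candidates for re-engineering, or for collecting training data that covers the cases where they mislead the model.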

Next Steps

Model Performance Issues: Model performance can be affected by a range of factors, including errors in the data pipeline, substantial changes in data patterns due to external events, evolving user behaviour, or inaccuracies in data collection and preprocessing. Proactively identifying and resolving these issues is vital for ensuring model accuracy, reliability, and overall effectiveness.

Use the Analyze tab to drill down into the data and gain further insights.
